When I set out to build a fresh produce delivery MVP from scratch, performance wasn't just a nice-to-have — it was mission-critical. The platform needed to handle real-time inventory updates, order processing, and multi-tenant shop management with lightning-fast response times.

Here's how I reached roughly 70ms average response times in staging, and what I expect when production traffic arrives.

The Tech Stack Behind the Speed

Rust + Axum: The Performance Foundation

I chose Rust with Axum 0.8.3 as my core framework, and it's already paying dividends. Rust compiles to native machine code, and its abstractions are zero-cost, so I get performance in the same class as C without giving up productive, high-level code. My handlers and database calls are written as async functions. While a request waits on I/O, the worker thread is free to serve other requests instead of sitting blocked.

Axum runs on the Tokio async runtime, which schedules many requests concurrently across a small pool of worker threads. Frameworks on other platforms interpret bytecode or rely on a garbage collector. My Rust API runs as optimized native code from day one.

Database Layer: Engineered for Speed

My PostgreSQL setup with SQLx is where the real magic happens:

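- Type-safe queries: All database queries are checked against the schema at compile time, so a typo or type mismatch fails the build instead of a live request. At runtime, SQLx caches prepared statements per connection, so repeated queries skip re-parsing on the server.

- Health checks: Every connection is tested before use, so a dropped connection gets replaced instead of failing a request.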
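Here is a minimal, std-only sketch that makes the health-check idea concrete. The `Conn` and `Pool` types and the `ping` method are hypothetical stand-ins. In SQLx, this behavior is controlled by `PoolOptions::test_before_acquire`, and the real pool is async and thread-safe, which this toy version is not.

```rust
use std::collections::VecDeque;

/// Hypothetical connection; a real pool would wrap a live PostgreSQL connection.
pub struct Conn {
    pub id: u32,
    pub alive: bool,
}

impl Conn {
    /// Stand-in for a cheap health check such as a ping round trip.
    fn ping(&self) -> bool {
        self.alive
    }
}

pub struct Pool {
    idle: VecDeque<Conn>,
    next_id: u32,
}

impl Pool {
    pub fn new(idle: Vec<Conn>) -> Self {
        let next_id = idle.iter().map(|c| c.id + 1).max().unwrap_or(0);
        Pool { idle: idle.into(), next_id }
    }

    /// Hand out an idle connection only after it passes the health check.
    /// Dead connections are discarded; if none survive, open a fresh one.
    pub fn acquire(&mut self) -> Conn {
        while let Some(conn) = self.idle.pop_front() {
            if conn.ping() {
                return conn;
            }
            // Failed the check: drop it instead of giving it to a request.
        }
        let conn = Conn { id: self.next_id, alive: true };
        self.next_id += 1;
        conn
    }

    /// Return a connection to the idle queue once the request is done.
    pub fn release(&mut self, conn: Conn) {
        self.idle.push_back(conn);
    }

    pub fn idle_count(&self) -> usize {
        self.idle.len()
    }
}
```

The trade-off is one extra round trip per checkout in exchange for not handing a request a connection the database has already closed.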

The connection pool includes exponential backoff retry logic, so the app recovers from brief database hiccups instead of failing outright.
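Here is a minimal sketch of exponential backoff. It assumes the delay doubles from a base value up to a cap. The function names and parameters are hypothetical, not SQLx APIs. `sleep` is passed in so the schedule can be tested without actually waiting. Async code would need a variant that awaits `tokio::time::sleep` instead.

```rust
use std::time::Duration;

/// Delay before retry number `attempt` (0-based): base * 2^attempt, capped at `max`.
pub fn backoff_delay(attempt: u32, base: Duration, max: Duration) -> Duration {
    // checked_pow / checked_mul fall back to the cap instead of overflowing.
    let factor = 2u32.checked_pow(attempt).unwrap_or(u32::MAX);
    base.checked_mul(factor).unwrap_or(max).min(max)
}

/// Run `op` up to `max_attempts` times, sleeping with exponential backoff
/// between failures, and return the last error if every attempt fails.
pub fn retry_with_backoff<T, E>(
    max_attempts: u32,
    base: Duration,
    max: Duration,
    mut op: impl FnMut() -> Result<T, E>,
    mut sleep: impl FnMut(Duration),
) -> Result<T, E> {
    let mut attempt = 0;
    loop {
        match op() {
            Ok(value) => return Ok(value),
            // Out of attempts: surface the error to the caller.
            Err(err) if attempt + 1 >= max_attempts => return Err(err),
            Err(_) => {
                sleep(backoff_delay(attempt, base, max));
                attempt += 1;
            }
        }
    }
}
```

In synchronous code, `std::thread::sleep` can be passed directly as the `sleep` argument. Many implementations also add random jitter to each delay, so that several instances don't retry in lockstep against a recovering database.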

Architecture Designed for Scale

My modular architecture separates concerns across 9 core modules, including:

- Authentication & user management

- Multi-tenant shop system

- Real-time inventory tracking

- Order processing & fulfillment

- File management with Google Cloud Storage

Each module follows a clean controller → service → model pattern, and the compile-time verified SQL queries catch query errors before they ever reach runtime.
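Here is one way to picture the controller → service → model split, as a std-only sketch of an inventory module. Everything in it is hypothetical. `Product`, `ProductStore`, and `reserve_stock` are illustrative names, a `HashMap` stands in for PostgreSQL, and the controller returns a plain status code and body rather than an Axum response.

```rust
use std::collections::HashMap;

// --- model: plain data plus storage access (a HashMap stands in for PostgreSQL) ---
#[derive(Debug, Clone, PartialEq)]
pub struct Product {
    pub id: u32,
    pub shop_id: u32,
    pub name: String,
    pub stock: u32,
}

pub struct ProductStore {
    rows: HashMap<u32, Product>,
}

impl ProductStore {
    pub fn new(rows: Vec<Product>) -> Self {
        ProductStore { rows: rows.into_iter().map(|p| (p.id, p)).collect() }
    }
    pub fn find(&self, id: u32) -> Option<&Product> {
        self.rows.get(&id)
    }
    pub fn find_mut(&mut self, id: u32) -> Option<&mut Product> {
        self.rows.get_mut(&id)
    }
}

// --- service: business rules only, no HTTP types ---
#[derive(Debug, PartialEq)]
pub enum InventoryError {
    NotFound,
    WrongShop,
    InsufficientStock,
}

/// Reserve `qty` units for a shop and return the remaining stock.
pub fn reserve_stock(
    store: &mut ProductStore,
    shop_id: u32,
    product_id: u32,
    qty: u32,
) -> Result<u32, InventoryError> {
    let product = store.find_mut(product_id).ok_or(InventoryError::NotFound)?;
    // Tenant isolation: a shop may only touch its own products.
    if product.shop_id != shop_id {
        return Err(InventoryError::WrongShop);
    }
    if product.stock < qty {
        return Err(InventoryError::InsufficientStock);
    }
    product.stock -= qty;
    Ok(product.stock)
}

// --- controller: map the service result to an HTTP-style status and body ---
pub fn reserve_handler(store: &mut ProductStore, shop_id: u32, product_id: u32, qty: u32) -> (u16, String) {
    match reserve_stock(store, shop_id, product_id, qty) {
        Ok(remaining) => (200, format!("{{\"remaining\":{remaining}}}")),
        // Report another tenant's product as missing rather than revealing it exists.
        Err(InventoryError::NotFound) | Err(InventoryError::WrongShop) => (404, "not found".into()),
        Err(InventoryError::InsufficientStock) => (409, "insufficient stock".into()),
    }
}
```

Because HTTP concerns stay in the controller, the service can be unit-tested without a server, and the tenant check lives in one place instead of being repeated in every handler.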

Security Without Performance Compromise

Authentication uses JWTs signed with industry-standard algorithms, accepted either as Bearer tokens or from secure HTTP-only cookies. My custom middleware handles auth guards and request logging without adding measurable latency.
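The Bearer-or-cookie fallback could look roughly like the sketch below. It assumes headers arrive as a `HashMap` with lowercased names, and it uses a cookie named `access_token`, which is a hypothetical name. A real middleware would use Axum's extractors. It would also still need to verify the token's signature and expiry with a JWT library after extracting it.

```rust
use std::collections::HashMap;

/// Hypothetical name of the HTTP-only auth cookie.
pub const AUTH_COOKIE: &str = "access_token";

/// Locate a JWT: prefer `Authorization: Bearer <token>`, then fall back to the cookie.
/// Header names are assumed lowercased; the scheme match is case-sensitive for brevity.
/// This only finds the token; it does not validate it.
pub fn extract_token(headers: &HashMap<String, String>) -> Option<String> {
    if let Some(value) = headers.get("authorization") {
        if let Some(token) = value.strip_prefix("Bearer ") {
            let token = token.trim();
            if !token.is_empty() {
                return Some(token.to_string());
            }
        }
    }
    // Cookie header format: "name1=value1; name2=value2".
    let cookies = headers.get("cookie")?;
    cookies.split(';').find_map(|pair| {
        let (name, value) = pair.trim().split_once('=')?;
        (name == AUTH_COOKIE && !value.is_empty()).then(|| value.to_string())
    })
}
```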

CORS is properly configured for cross-origin requests with credential support, essential for my multi-domain setup.
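Credential support changes how CORS has to work. When a request carries cookies, browsers reject a wildcard `Access-Control-Allow-Origin: *`. The server must echo back one specific allowed origin and send `Access-Control-Allow-Credentials: true`. This std-only sketch shows that rule with a hypothetical allowlist. In an Axum app, tower-http's `CorsLayer` would normally handle it.

```rust
/// Build CORS response headers for a request's `Origin`, given an allowlist.
/// With credentials enabled, the wildcard origin is not allowed,
/// so a matching origin is echoed back explicitly.
pub fn cors_headers(origin: Option<&str>, allowed: &[&str]) -> Vec<(&'static str, String)> {
    // `Vary: Origin` tells caches the response differs per requesting origin.
    let mut headers = vec![("Vary", "Origin".to_string())];
    if let Some(origin) = origin {
        if allowed.contains(&origin) {
            headers.push(("Access-Control-Allow-Origin", origin.to_string()));
            headers.push(("Access-Control-Allow-Credentials", "true".to_string()));
        }
    }
    // Disallowed origins get no CORS grant, so the browser blocks the response.
    headers
}
```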

Development & Deployment Optimizations

My development workflow is built for speed:

- Automatic rebuild and restart with `cargo watch` for fast feedback during development

- One-command deployment: `just deploy` handles git pull, compilation, migrations, and service restart

- Comprehensive testing covering authentication, inventory, orders, and shop management

- Zero-downtime migrations using SQLx migrate

The staging environment runs on systemd with automatic service management.

What I'm Seeing in Staging

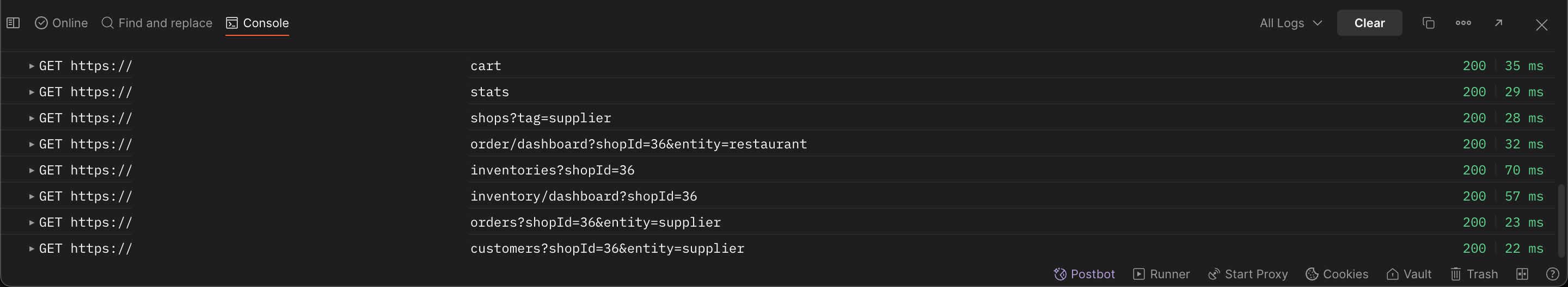

Current performance metrics show:

- ~70ms average response times across most endpoints

- No connection pool exhaustion in load testing so far

- Consistent memory usage with no garbage collection pauses

Production Traffic Expectations

Based on my architecture choices, here's what I expect when real traffic arrives:

Advantages I'll maintain:

- Native code keeps per-request CPU cost low, leaving plenty of headroom before load starts to raise latency

- Connection pooling will efficiently handle concurrent requests

- Async/await design scales naturally with request volume

- Type-safe queries eliminate a whole class of runtime errors

Areas I'm monitoring:

- Database connection scaling (currently configured for moderate load)

- Google Cloud Storage integration under high file upload volumes

- JWT validation performance with increased authentication requests

My scaling plan:

- Horizontal scaling: My stateless design makes adding instances straightforward, though each new instance brings its own pool of PostgreSQL connections

- Database optimization: Query analysis and indexing based on real usage patterns

- Connection pool tuning: Adjusting pool size based on actual concurrent load

- CDN integration: Offloading static assets and caching API responses where appropriate
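One common back-of-the-envelope starting point for pool tuning is Little's law. It says the average number of busy connections equals the query arrival rate times how long each query holds a connection. The sketch below is an illustration, not a tuned formula. It applies the law with a headroom percentage and clamps the result to `db_max`, a hypothetical per-instance share of PostgreSQL's `max_connections`.

```rust
/// Suggest a pool size from Little's law: busy = arrival rate × hold time.
/// `headroom_pct` scales the estimate (150 means 1.5×) to absorb bursts.
/// Integer math avoids floating-point rounding at the ceiling step.
/// Panics if `db_max` is 0, because `clamp` requires min <= max.
pub fn suggested_pool_size(queries_per_sec: u64, avg_hold_ms: u64, headroom_pct: u64, db_max: u64) -> u64 {
    // Scaled by 1000 (ms per second) × 100 (percent).
    let busy_scaled = queries_per_sec * avg_hold_ms * headroom_pct;
    // Ceiling division back to whole connections.
    let sized = (busy_scaled + 100_000 - 1) / 100_000;
    sized.clamp(1, db_max)
}
```

For example, 400 queries per second each holding a connection for about 5 ms averages 2 busy connections. With `headroom_pct` set to 150, that suggests a pool of 3. Real traffic is burstier than an average, so measured pool wait times should have the final say.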

The Rust Advantage in Production

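Because Rust is compiled ahead of time, the performance I measure in staging doesn't depend on JIT warm-up or garbage collection behaving the same way in production. That means fewer surprises from runtime optimizations changing under load. The remaining variables are mostly external: the database, the network, and third-party services like Google Cloud Storage.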

The combination of zero-cost abstractions, efficient memory management, and Axum's lightweight design gives me confidence that fast response times will hold steady as traffic scales.

Thanks for reading. Cheers.